AI/Journalism Hackathon: Harnessing AI to Enhance Trust and Deepen Engagement in Local Communities

Key takeaways from the AI/Journalism Hackathon at UC Berkeley: Harnessing AI to Enhance Trust and Deepen Engagement in Local Communities.

Artificial Intelligence (AI) will not replace humans in the newsroom, even as the technology evolves rapidly and grows more capable by the day. Instead, new tools will help journalists and others unlock and share information, scale new media projects and solutions more easily, and become vastly more productive. This was one takeaway from projects launched during a three-day hackathon that brought journalists, documentary filmmakers, technologists, designers and students together to explore how AI can help enhance trust and deepen engagement in local communities.

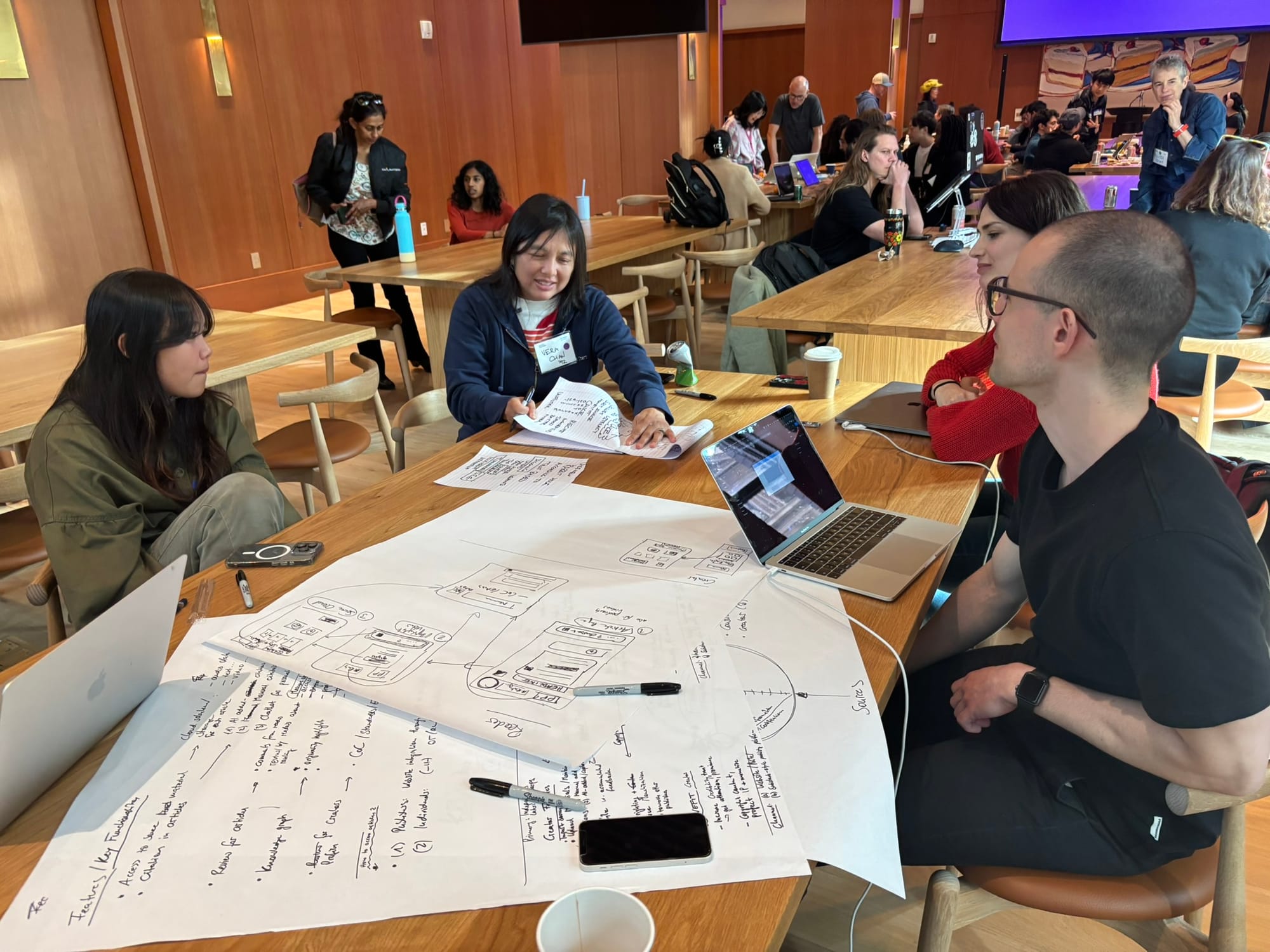

The event was hosted by Hacks/Hackers and UC Berkeley Journalism, with support from the MacArthur Foundation, Nancy Blachman and David desJardins, the Philomathia Foundation, and the Documentary Program at Berkeley Journalism. Over the course of a weekend, attendees from throughout the Bay Area pitched original ideas, formed teams, built prototypes and presented projects that ranged from advanced fact-checking to authenticating media and developing new stories, tools and platforms for deeper audience engagement.

While current AI tools often hallucinate and require some technical know-how to develop and deploy, functional "AI agents" that help journalists create innovative audience experiences across media platforms will likely emerge within the next year. At the same time, guardrails are still needed to address bias in AI, as well as the incorrect or misleading results that stem from the inherently probabilistic nature of large language models (LLMs).

The hackathon featured a series of panelists who are already building AI tools for journalism. The general consensus among the weekend's presenters was that, rather than artificial general intelligence (AGI) being just around the corner, we're still in the early, exciting days of AI, much like the dawn of the World Wide Web in the mid-1990s.

Presenters included four-time Pulitzer Prize winner David Barstow, chair of Berkeley’s Investigative Reporting Program. Barstow is helping lead the Police Records Access Project, which is using AI tools to develop a California-wide database of police misconduct and use-of-force records.

Researchers, students, public defenders, journalists and advocates have so far filed records requests with 700 police departments across California, securing more than 175,000 files. By combining data science and artificial intelligence, the project aims to make these records faster to analyze and easier to access, helping communities, journalists, public defenders, prosecutors and police departments develop a deeper understanding of California policing.

Documentary filmmaker and archival producer Rachel Antell discussed the need for transparency when incorporating AI-generated content into journalism. The boom in online streaming services in recent years has created an insatiable demand for nonfiction filmmaking. Amid this flood of documentary content, generative AI has often been used to save time by creating imagery, and even non-existent historical artifacts, potentially muddying the archival record Antell relies on for her documentary work. Together with collaborators, Antell founded the Archival Producers Alliance (APA), which has since released generative AI guidelines for archival producers and other filmmakers.

Meanwhile, David Cohn, senior director of research and development at Advance Local, showcased how AI is already being used to improve newsroom processes. Cohn and his team have built a series of custom generative AI applications that show how every journalist's job could be made easier: for example, by automating the repetitive work of checking court websites for case updates, or by finding content for affiliate posts.

As presenters discussed AI and journalism throughout the weekend, hackathon teams had already started building tools aimed at enhancing audience trust and boosting engagement with local communities. Early pitches ranged from using AI to make the journalistic fact-checking process more accessible to the public, to building an "interview helper" that listens along and reminds the journalist to ask the questions needed to get all the answers for their story.

On Sunday afternoon, the teams presented their hackathon demos. There were four winning teams:

- The DocImpact Toolkit team built a tool for independent filmmakers, who typically have little capacity to promote their work and therefore struggle to reach audiences. To help with audience engagement, the team demoed an AI-driven PR toolkit for emerging filmmakers: the tool uses AI to create and execute a PR strategy, with humans providing oversight and making adjustments as needed.

- The Slyce team developed a demo aimed at rebuilding audience trust in media by providing readers with more insights about how journalists create stories. The proposed tool would share article sources, interview transcripts, and iterations in the editorial process, and would allow for public feedback.

- Noting that it can be time-consuming for journalists and the public to keep track of everything that happens at the many public hearings in local government, the Community Meetings Chatbot team built a tool that "attends" meetings, extracts and publishes information about what went on, and then answers questions about agendas, votes and what was discussed.

- Cite Unseen, a tool for Wikipedia editors, adds icons to citations in articles to show the nature and reliability of sources at a glance. The tool has so far categorized 3,400 domains, largely by hand with some automation. However, there are nearly 30 million citations on English Wikipedia alone, so the hackathon project, which recently received a $20,000 grant from Wikimedia Switzerland, explored how LLMs such as ChatGPT could help categorize sources at scale.

While teams collaborated and explored ways to increase newsroom capacity and engage local audiences, guest presenters throughout the weekend considered how to improve trust in AI. They discussed the need to keep developing and implementing "guardrails" that not only address bias and ensure AI is inclusive, but also protect information integrity and credibility.

One presenter suggested that AI has the potential to "become a threat to itself" through model decay, as models trained repeatedly on AI-generated data can degrade over 20–30 generations. As a result, human-generated data, and humans themselves, will remain important in the development of AI technologies.

Hacks/Hackers advances media innovation by regularly convening hackathons and other events around the world that bring together people from diverse backgrounds, including journalists, technologists and entrepreneurs.